Amazon SageMaker is a machine learning (ML) workflow service for building, training, and deploying models. It aims to lower the cost of building ML solutions and to increase the productivity of data science teams.

SageMaker uses Docker containers to train and deploy machine learning algorithms. Each container packages all the code and runtime libraries that an algorithm needs, which provides a consistent environment for both training and deployment.

There are three ways to train models and run inference with Amazon SageMaker:

- Using prebuilt SageMaker Docker images

SageMaker provides prebuilt container images for several common machine learning frameworks. We can use these images from a SageMaker notebook instance or from SageMaker Studio.

- Modifying an existing Docker container and deploying it in SageMaker

We can modify an existing Docker image to make it compatible with SageMaker. This approach is useful when a prebuilt SageMaker image does not support the features or requirements we need.

- Creating a container with our own algorithms and models

If none of the existing SageMaker containers meets our needs and we do not already have a container of our own, we may need to create a new Docker container with our training and inference algorithms for use with SageMaker.

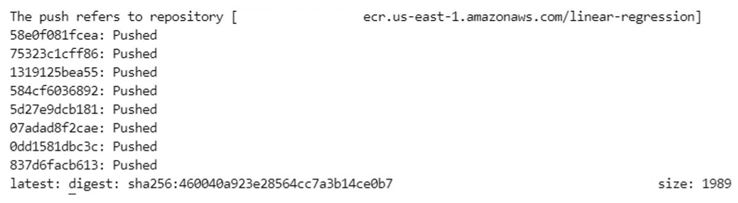

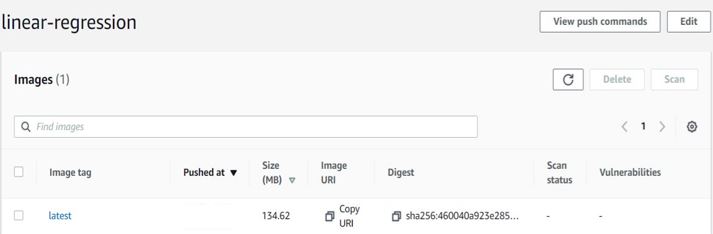

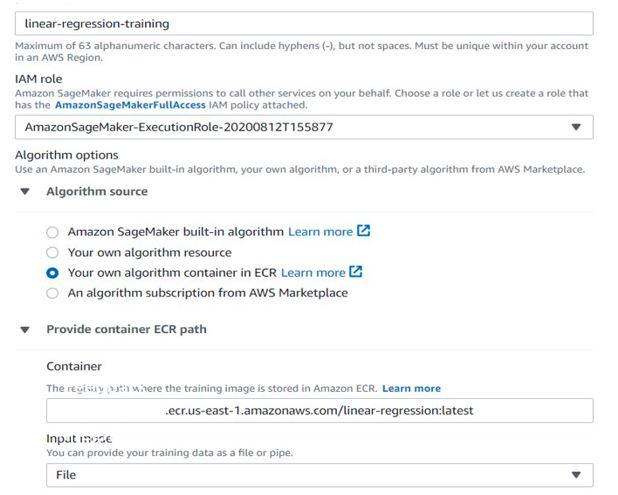

Amazon SageMaker comes with many built-in algorithms, but some requirements call for custom algorithms that still rely on a managed solution for hosting and inference. In Amazon SageMaker, we can store custom container images in Amazon Elastic Container Registry (ECR), with separate images for the training code and the inference code, or we can combine both into a single Docker image. In our use case, we wanted a single image that supports both training and hosting in Amazon SageMaker.
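With a single image, the same ECR image URI is referenced both when creating the training job and when creating the model for hosting. The sketch below illustrates this under stated assumptions: it builds a URI in the standard private ECR format for a commercial AWS Region, and it returns only the image-related fragments of the SageMaker `CreateTrainingJob` and `CreateModel` request bodies. The account ID, Region, repository name, and tag are placeholders, the helper names are hypothetical, and nothing here calls AWS.

```python
import re


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Return a private ECR image URI of the form
    <account_id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>.
    Assumes a commercial Region (China Regions use amazonaws.com.cn)."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("an AWS account ID is exactly 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def single_image_configs(image_uri: str) -> tuple:
    """Return the image-related fragments of a CreateTrainingJob request and a
    CreateModel request that both point at the same image. Every other
    required field (names, role ARNs, resources, S3 paths) is omitted."""
    training_fragment = {
        "AlgorithmSpecification": {"TrainingImage": image_uri, "TrainingInputMode": "File"}
    }
    model_fragment = {"PrimaryContainer": {"Image": image_uri}}
    return training_fragment, model_fragment


if __name__ == "__main__":
    # Placeholder account ID, Region, repository, and tag.
    uri = ecr_image_uri("123456789012", "us-east-1", "sklearn-custom", "v1")
    training, model = single_image_configs(uri)
    print(training)
    print(model)
```

Once merged with the remaining required fields, these dictionaries could be passed to the `create_training_job` and `create_model` methods of a boto3 SageMaker client.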

In this blog post, we create our own container, package our custom scikit-learn model in it, and use it to train, host, and run inference in Amazon SageMaker.

Creating a custom container

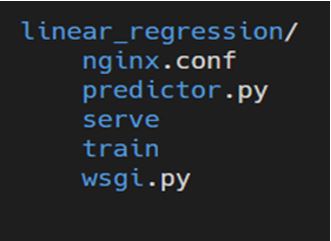

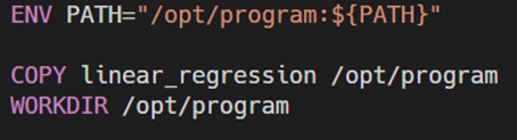

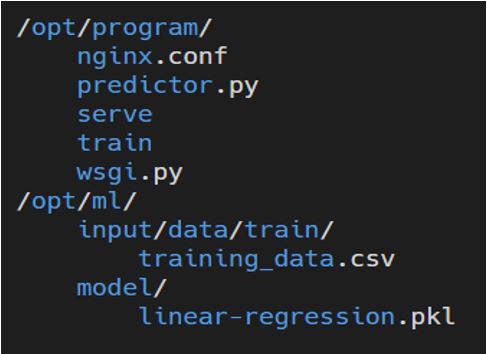

Amazon SageMaker starts the container with the argument `train` for training jobs and `serve` for hosting, so the image must handle both commands. In our container, these are executable scripts named `train` and `serve`, stored together with the configuration files under the `/opt/program` directory. The training script can be written in any language that runs inside a Docker container; we chose Python. For inference, we used Nginx and Flask to build a RESTful microservice that serves HTTP inference requests. Below is the directory structure of our container.
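Beyond the `train` and `serve` commands, SageMaker mounts training inputs and hyperparameters under `/opt/ml/input`, uploads whatever is written to `/opt/ml/model`, and at hosting time sends health checks to `GET /ping` and prediction requests to `POST /invocations` on port 8080. The single-file sketch below illustrates that contract under several assumptions; it is not the code from our container. A plain WSGI app (the interface Flask is built on) replaces Nginx and Flask so the example has no dependencies. A mean-of-target placeholder stands in for the scikit-learn model. The `ML_PREFIX` environment variable, the `training` channel name, the `target_column` hyperparameter, and the `model.json` file name are all hypothetical.

```python
import csv
import json
import os
import sys
from pathlib import Path

# SageMaker mounts everything under /opt/ml. ML_PREFIX is a hypothetical
# override so the sketch can be exercised outside a container.
ML_PREFIX = Path(os.environ.get("ML_PREFIX", "/opt/ml"))


def train(prefix: Path = ML_PREFIX) -> Path:
    """Read hyperparameters and the 'training' channel, then write the model artifact."""
    # SageMaker writes hyperparameters as a JSON object whose values are strings.
    hp_file = prefix / "input" / "config" / "hyperparameters.json"
    hyperparameters = json.loads(hp_file.read_text()) if hp_file.exists() else {}
    target_column = int(hyperparameters.get("target_column", "0"))  # hypothetical

    # In File mode each channel is a directory: /opt/ml/input/data/<channel>/.
    targets = []
    for csv_path in sorted((prefix / "input" / "data" / "training").glob("*.csv")):
        with csv_path.open(newline="") as f:
            # Assumes header-less, numeric CSV files.
            targets += [float(row[target_column]) for row in csv.reader(f) if row]
    if not targets:
        raise ValueError("no training rows found")

    # Placeholder for a scikit-learn fit: this "model" predicts the mean target.
    model = {"mean": sum(targets) / len(targets)}

    # Files in /opt/ml/model are packaged as model.tar.gz and uploaded to S3.
    model_dir = prefix / "model"
    model_dir.mkdir(parents=True, exist_ok=True)
    artifact = model_dir / "model.json"
    artifact.write_text(json.dumps(model))
    return artifact


def make_app(prefix: Path = ML_PREFIX):
    """Build a WSGI app exposing the two routes SageMaker calls at hosting time."""
    # At hosting time SageMaker extracts model.tar.gz back into /opt/ml/model.
    model = json.loads((prefix / "model" / "model.json").read_text())

    def app(environ, start_response):
        path = environ.get("PATH_INFO", "")
        method = environ.get("REQUEST_METHOD", "GET")
        if path == "/ping" and method == "GET":
            # Health check: a 200 response tells SageMaker the container is ready.
            start_response("200 OK", [("Content-Type", "text/plain")])
            return [b"\n"]
        if path == "/invocations" and method == "POST":
            length = int(environ.get("CONTENT_LENGTH") or 0)
            body = environ["wsgi.input"].read(length).decode("utf-8")
            rows = [line for line in body.splitlines() if line.strip()]
            # One prediction per non-empty CSV input row.
            payload = "\n".join(str(model["mean"]) for _ in rows).encode("utf-8")
            start_response("200 OK", [("Content-Type", "text/csv"),
                                      ("Content-Length", str(len(payload)))])
            return [payload]
        start_response("404 Not Found", [("Content-Type", "text/plain")])
        return [b"not found\n"]

    return app


def main(command: str) -> None:
    if command == "train":
        train()
    elif command == "serve":
        from wsgiref.simple_server import make_server
        # SageMaker sends requests to port 8080. wsgiref is for illustration only;
        # in a setup like ours, Nginx would listen there and proxy to the app.
        make_server("0.0.0.0", 8080, make_app()).serve_forever()
    else:
        raise SystemExit(f"unknown command: {command!r}")


if __name__ == "__main__" and len(sys.argv) > 1:
    # SageMaker runs the image with 'train' or 'serve' as the argument.
    main(sys.argv[1])
```

Because the file dispatches on its first argument, one image can answer both SageMaker commands. In a production image, the `/opt/program` scripts and the Nginx and Flask stack described above would take the place of the single-file and `wsgiref` shortcuts used here.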